Running Small LLMs (oxymoron) Locally With an Integrated GPU on a Laptop.

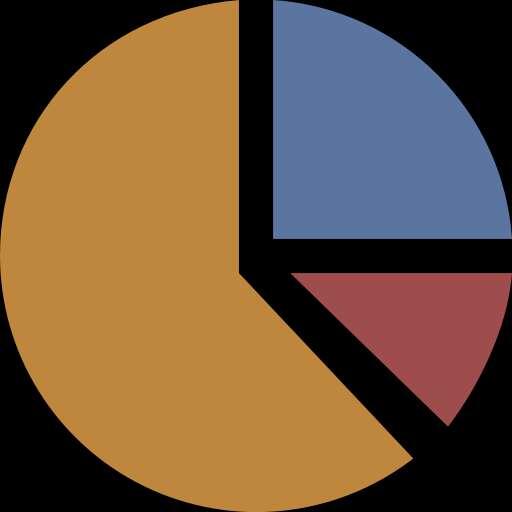

This is what running local models looks like on a consumer-grade laptop without a discrete GPU. In my opinion it's still acceptable for many use cases, but I definitely want to upgrade to a machine with a dedicated GPU.

Laptop: Framework 13

OS: Linux

Hardware: AMD Ryzen 7040 Series (AMD Ryzen 7 7840U with Radeon 780M graphics, 16 threads)

Run in a terminal:

```
ollama run --verbose llama3.2:1b
```

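The `--verbose` flag makes Ollama print timing statistics after each response, such as load duration, prompt eval rate, and eval rate (generation speed in tokens per second).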
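If you want the same numbers in a script instead of an interactive session, Ollama's local REST API returns them as fields of the `/api/generate` response (`prompt_eval_count`, `prompt_eval_duration`, `eval_count`, `eval_duration`, with durations in nanoseconds). Below is a minimal sketch, assuming Ollama is serving on its default `localhost:11434` and that `curl` and `jq` are installed; the function names `tokens_per_second` and `bench` are hypothetical helpers, not part of Ollama.

```shell
#!/usr/bin/env bash
# Sketch only: assumes a local Ollama server on localhost:11434, plus curl and jq.
# tokens_per_second and bench are hypothetical helper names for illustration.

# Convert a token count and a duration in nanoseconds into tokens per second.
# Prints "n/a" if the duration is missing (null) or zero.
tokens_per_second() {
  awk -v n="$1" -v ns="$2" 'BEGIN {
    if (ns + 0 <= 0) print "n/a"; else printf "%.2f\n", n / (ns / 1e9)
  }'
}

# Send one non-streaming prompt and report prompt and generation speed,
# computed the same way as the "prompt eval rate" and "eval rate" lines.
bench() {
  local model="${1:-llama3.2:1b}"
  local prompt="${2:-Why is the sky blue?}"
  local body resp
  body=$(jq -n --arg m "$model" --arg p "$prompt" '{model: $m, prompt: $p, stream: false}')
  resp=$(curl -s http://localhost:11434/api/generate -d "$body")
  echo "prompt eval rate: $(tokens_per_second "$(jq '.prompt_eval_count' <<<"$resp")" "$(jq '.prompt_eval_duration' <<<"$resp")") tokens/s"
  echo "eval rate:        $(tokens_per_second "$(jq '.eval_count' <<<"$resp")" "$(jq '.eval_duration' <<<"$resp")") tokens/s"
}
```

Saved as, say, `bench.sh` (a hypothetical filename), it can be used with `source bench.sh && bench llama3.2:1b "Explain what an iGPU is."`. Keeping the rate calculation in its own function makes it easy to check without a running server.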

#stats #statistics #education #analytics #econometrics #foss #opensource #learning #nostr #ai #llm #llms #framework #framework13 #frameworklaptop #fossanalytics #software #linux